Showcase

We are proud to present the know-how and abilities of our institute. This platform highlights the innovative projects, research, and expertise of our faculty and students. Here you will find accomplishments that reflect our commitment to excellence and to advancing knowledge across many fields. Through new technology, creative problem-solving, and collaboration, our institute works to drive change and make a lasting impact. Join us in exploring the showcases that exemplify our mission to inspire, educate, and lead in a rapidly changing world.


In our latest showcase, we present advances in affective computing that highlight our expertise in this field. By combining modern algorithms with machine learning techniques, we have developed systems that recognize and interpret human emotions from facial expressions, voice intonation, and physiological signals. Our demonstrations feature real-time applications in domains including healthcare, education, and customer service. Attendees will see how this technology can support empathetic interactions and emotional well-being, leading to more meaningful human-computer relationships. Join us as we explore the future of affective computing and its potential to transform how we connect with machines and with each other.

VR-based Dialog System as Depression Intervention

A VR-based simulation of a dialog between a boss and a depressed employee.

Dialect Recognition using Deep Learning

Real-time recognition of Arabic and non-Arabic dialects. Deep learning models detect which dialect is being spoken as the audio comes in.

AI-based Segmentation of Dangerous Objects (Knives and Scissors)

Segmentation of dangerous objects such as knives and scissors. Deep learning models are used to segment the objects of interest.

Affect Bursts: Audio-based Pain Detection

Real-time pain detection from affect bursts (brief, nonverbal vocal expressions of emotion) using deep learning models.

AI Therapist for OCD Treatment

A dialog with an AI therapist designed to calm patients with obsessive-compulsive disorder (OCD).

Real-time Pose Detection for Speaker State Recognition

Recognition of body poses using deep learning models. The model distinguishes between five poses and uses them to infer speaker states such as confidence, scepticism, and relaxation.

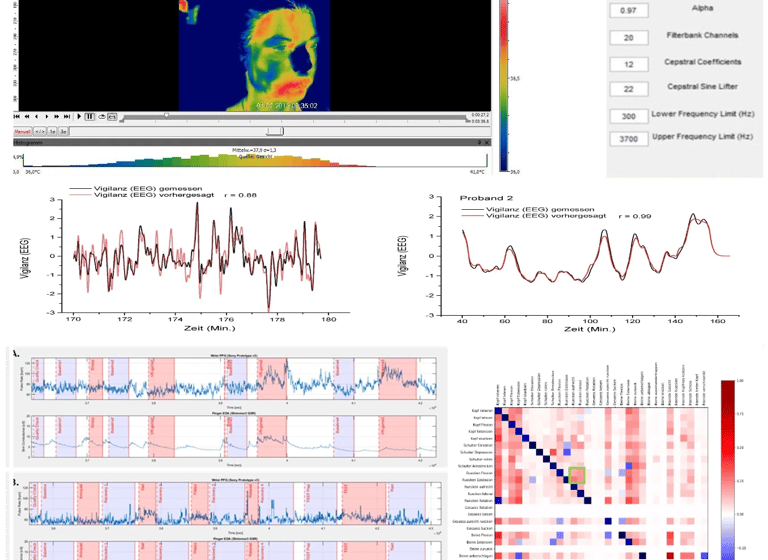

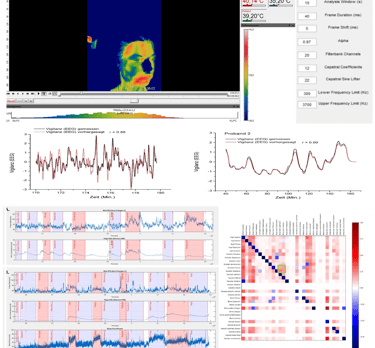
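Real-time pose recognizers often classify each video frame independently, which can make the output flicker from frame to frame. The minimal Python sketch below shows one common way to stabilize such predictions before inferring a speaker state: a majority vote over a sliding window of recent frames. The pose labels, the `POSE_TO_STATE` mapping, and the `PoseStateSmoother` class are hypothetical placeholders; the showcase does not specify its five poses or how they map to speaker states.

```python
from collections import Counter, deque

# Hypothetical pose labels and mapping for illustration only; the actual
# five poses and their speaker-state mapping are not specified.
POSE_TO_STATE = {
    "arms_open": "confidence",
    "hands_on_hips": "confidence",
    "arms_crossed": "scepticism",
    "hand_on_chin": "scepticism",
    "hands_behind_head": "relaxation",
}


class PoseStateSmoother:
    """Majority vote over the last `window` frame-level pose predictions."""

    def __init__(self, window: int = 15) -> None:
        # Oldest predictions drop out automatically once the window is full.
        self.history = deque(maxlen=window)

    def update(self, pose_label: str) -> str:
        """Add one frame's predicted pose and return the smoothed speaker state."""
        self.history.append(pose_label)
        # most_common(1) returns [(label, count)]; on a tie, the label that
        # appears earliest in the window wins.
        majority_pose, _ = Counter(self.history).most_common(1)[0]
        return POSE_TO_STATE.get(majority_pose, "unknown")
```

A larger window gives steadier output at the cost of reacting more slowly when the speaker actually changes posture.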

EEG-based Prediction of Microsleep Intensity

A deep learning model predicts the intensity of microsleep from two EEG channels in a driving environment.
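The sketch below is not the showcase's deep learning model. Under stated assumptions, it illustrates one common preprocessing step in drowsiness-related EEG analysis: estimating theta (4 to 8 Hz) and alpha (8 to 13 Hz) band power for each of two channels with a naive discrete Fourier transform. The function names `band_power` and `drowsiness_features`, the band edges, and the use of a theta/alpha ratio as a feature are illustrative assumptions.

```python
import math


def band_power(signal, fs, f_lo, f_hi):
    """Mean power of `signal` in [f_lo, f_hi) Hz, computed with a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC offset
    total, bins = 0.0, 0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # centre frequency of DFT bin k
        if f_lo <= freq < f_hi:
            re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
            im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
            total += (re * re + im * im) / n
            bins += 1
    return total / bins if bins else 0.0


def drowsiness_features(ch1, ch2, fs):
    """Theta power, alpha power, and theta/alpha ratio for two EEG channels."""
    feats = {}
    for name, channel in (("ch1", ch1), ("ch2", ch2)):
        theta = band_power(channel, fs, 4.0, 8.0)
        alpha = band_power(channel, fs, 8.0, 13.0)
        feats[name] = {
            "theta": theta,
            "alpha": alpha,
            "theta_alpha_ratio": theta / alpha if alpha > 0 else float("inf"),
        }
    return feats
```

In practice such features would be computed on short sliding windows of the EEG stream and passed to a learned model; a library FFT would replace the naive DFT for efficiency.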

Dry Cough Detection

Real-time detection of dry cough using deep learning models.

Audio Synthesis of Chewing Sounds triggered by Chewing Motions

Computer vision detects chewing movements from facial expressions, and each detected movement is translated into a synthesized crunchy chewing sound.
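A minimal Python sketch of this detect-then-sonify pipeline, assuming a face-tracking stage already provides a normalized mouth-opening value per video frame (for example, lip distance divided by face height from facial landmarks). The thresholds, the hysteresis approach, and the noise-burst synthesis in the hypothetical functions `detect_chews` and `crunch` are illustrative choices, not the showcase's actual implementation.

```python
import math
import random


def detect_chews(mouth_opening, open_thr=0.35, close_thr=0.2):
    """Return frame indices where the jaw closes after having opened.

    Two thresholds (hysteresis) keep jitter around a single threshold
    from producing spurious chewing events.
    """
    events = []
    is_open = False
    for i, value in enumerate(mouth_opening):
        if not is_open and value > open_thr:
            is_open = True
        elif is_open and value < close_thr:
            is_open = False
            events.append(i)  # closing bite: trigger a crunch here
    return events


def crunch(sample_rate=16000, duration_ms=80, decay=40.0, seed=None):
    """Synthesize a crunch as white noise under an exponential decay envelope."""
    rng = random.Random(seed)
    n = sample_rate * duration_ms // 1000
    return [
        rng.uniform(-1.0, 1.0) * math.exp(-decay * t / sample_rate)
        for t in range(n)
    ]
```

Each index returned by `detect_chews` marks a frame where the jaw closes; playing one `crunch()` buffer at each of those frames yields one crunch per bite.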

Speech Dialog System for Real-time Violence Detection

Real-time description of violent scenes is a new way to analyze video content with computer vision and AI. Such systems can be useful in fields such as security surveillance, journalism, and legal contexts, where they help build a better understanding of violent situations.